
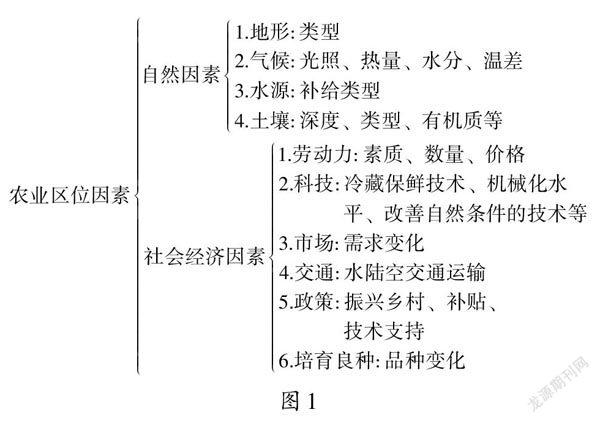

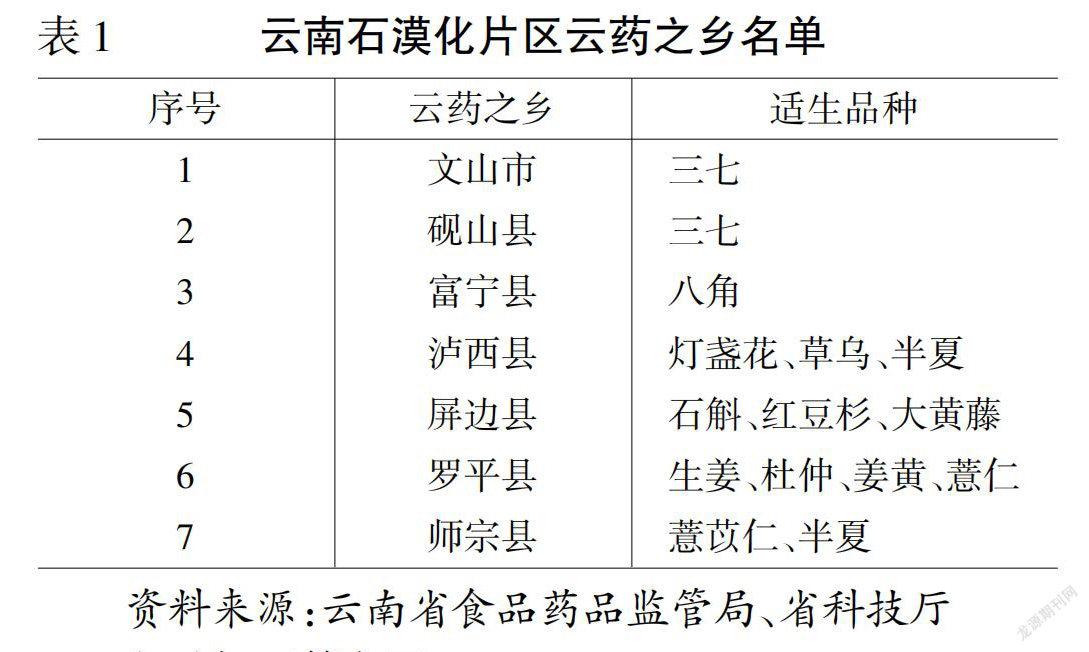

Lijun Wang,Jiayao Wang,Zhenzhen Liu,Jun Zhu,Fen Qin

a Henan Industrial Technology Academy of Spatio-Temporal Big Data,Henan University,Kaifeng 475004,Henan,China

b College of Geography and Environmental Science,Henan University,Kaifeng 475004,Henan,China

c Key Laboratory of Geospatial Technology for the Middle and Lower Yellow River Regions,Ministry of Education,Henan University,Kaifeng 475004,Henan,China

d Key Research Institute of Yellow River Civilization and Sustainable Development,Ministry of Education,Henan University,Kaifeng 475004,Henan,China

e Henan Technology Innovation Center of Spatial-Temporal Big Data,Henan University,Kaifeng 475004,Henan,China

Keywords: Land use and crop classification; Deep learning; High-resolution image; Feature selection; UNet++

ABSTRACT High-resolution, deep-learning-based remote-sensing image analysis has been widely used in land-use and crop-classification mapping. However, the influence of composite feature bands, including complex feature indices arising from different sensors, on the backbone, patch size, and predictions of transferable deep models requires further testing. The experiments were conducted at six sites in Henan province from 2019 to 2021. This study sought to enable the transfer of classification models across regions and years for Sentinel-2A (10-m resolution) and Gaofen PMS (2-m resolution) imagery. With feature selection and up-sampling of small samples, the performance of the UNet++ architecture was examined for five backbones and four patch sizes. Joint loss, mean Intersection over Union (mIoU), and epoch time were analyzed, and the optimal backbone and patch size for both sensors were Timm-RegNetY-320 and 256 × 256, respectively. The overall accuracy and F1 scores of the Sentinel-2A predictions ranged from 96.86% to 97.72% and 71.29% to 80.75%, respectively, compared to 75.34%-97.72% and 54.89%-73.25% for the Gaofen predictions. The accuracies of each site indicated that patch size exerted a greater influence on model performance than the backbone. The feature-selection-based predictions with the UNet++ architecture and up-sampling of minor classes demonstrated the generalization capability of deep learning for classifying complex ground objects, offering improved performance compared to the UNet, Deeplab V3+, Random Forest, and Object-Oriented Classification models. In addition to the overall accuracy, confusion matrices, precision, recall, and F1 scores should be evaluated for minor land-cover types. This study contributes to large-scale, dynamic, and near-real-time land-use and crop mapping by integrating deep learning and multi-source remote-sensing imagery.

The growing demand for sustainable land use and food security for an increasing global population necessitates close monitoring of land cover and agricultural activities.With the rapid development of remote sensing (RS) technology and deep-learning (DL)algorithms,spatial information extracted from satellite images has been widely used for various applications [1].The large amounts of multi-source imagery now available provide detailed information about the land surface,opening new avenues for land-use and crop mapping.

Automatic information classification is becoming a fundamental technology for agricultural management and environmental protection [2]. However, the characteristics used for ground-object recognition, such as shape and texture, can vary between multi-resolution images, raising challenges for automatic classification. Furthermore, diverse imaging conditions usually lead to marked differences in spectral characteristics between RS images, often impairing the precise separability of complex land-cover types [3].

In recent years, it has become possible to obtain multi-source and multi-temporal RS images over specified geographical areas. Increasing computing capacity and the availability of complex algorithms mean that multi-resolution RS applications are consistently gaining importance, boosted by a growing number of freely available Earth observation datasets [4]. One of the requirements of precision farming and crop production is the timely and accurate acquisition of crop information over large areas, which can inform the decision-support systems of governments. With the successful application of DL methods to computer vision, researchers have made a great effort to transfer their superior performance to information extraction from diverse RS images [5]. To understand land-use and cropping pattern changes, reliable satellite-based methods aided by DL algorithms are used for precision-agriculture decision support.

The Copernicus program developed by the European Space Agency provides high-resolution optical images, including Sentinel-2A (S-2A) and Sentinel-2B (S-2B) with 13 bands and a fused spatial resolution of 10 m. Several studies have shown that Sentinel-2 multispectral data are well suited to land-use and crop classification [2]. Even in a complex agricultural area, the classification accuracy of six summer crop species and abandoned crops based on S-2A was greater than 75% and 98%, respectively [3,6]. The Gaofen-1B (GF-1B) and Gaofen-6 (GF-6) satellites were developed as part of the Chinese High-resolution Earth Observation System and were launched in 2018. Both satellites are equipped with two panchromatic and multispectral (PMS) sensors and four wide-field-view (WFV) sensors, and acquire data in four spectral bands ranging from visible to near-infrared light with 2- and 16-m resolution, respectively. Although the Gaofen (GF) system is a valuable data source for precision agriculture and land-cover monitoring, the ground-object recognition capacity of GF PMS images in complex regions is typically lower than that of images with red-edge and short-wave infrared bands, owing to the availability of fewer spectral bands. Fan et al. [7] found that Sentinel-2 achieved higher crop classification accuracy (96%-98%) than GF-1 (93%-94%). Ren et al. [8] similarly showed that the accuracy of Sentinel-2 for maize recognition exceeded 88%, in contrast to 85% in the case of GF-1. Thus, multispectral imagery with red-edge bands is more valuable for agricultural applications.

In addition to spectral bands, vegetation indices (VIs) and texture features can be used to interpret RS images. A vegetation index reflects the growth of a plant by combining data from multiple bands of satellite imagery. Texture features capture image surface information and its relationship with the surrounding environment, and they are widely used in RS image classification schemes. However, the accuracy and computational cost of many machine learning methods suffer from the "curse of dimensionality" arising from the correlation between features of the input dataset [9]. Wei et al. [10] used principal-component analysis (PCA) to reduce hyperspectral image dimension and fused texture features to extract 18 categories of land cover and crops in Honghu and Xiong'an, with respective increases in overall accuracy from 91.05% to 98.71% and 88.86% to 99.71%. Thus, feature selection methods, such as PCA [11] and minimum noise fraction [12], are used to reduce data dimensionality, including spectral, VI, and texture features, and improve RS classification accuracy.

Feature selection involves selecting a subset of sensitive features for machine-learning modeling.The common algorithms include random forest(RF)[13],gradient-boosting decision trees[14],and extreme gradient boosting (XGBoost) [15].XGBoost involves the novel implementation of gradient-boosting decision trees,and was used in the present study to assess feature scores.Yang et al.[16] optimized 10 spectral,15 VIs,and 4 texture features from S-2A images,and identified four land-cover types including corn,soybean,peanut,and urban in Jilin province,with OA and Kappa values of 94.27%and 91.70%,respectively.Chen et al.[17]and Zhang et al.[18]used a feature extractor to generate spectral and semantic features,and demonstrated the superiority of DL methods for extracting crop and land-use information from GF images.Thus,coupling spectral,VIs,and texture features derived by mathematical transformation can improve classification accuracy.

Machine-learning algorithms,such as RF and object-based image analysis,have become the predominant approaches for RS classification [19].However,these methods are largely dependent on prior knowledge available from ground samples as well as the quantity and quality of training samples.Relevant agricultural data are currently available for several countries,such as the Cropland Data Layer published by the United States Department of Agriculture and the Crop Inventory published by Agriculture and Agri-Food Canada.However,such data sets are not readily available in others,including the highly agricultural regions of China.Moreover,manual collection of ground annotations and labeled images from Google Earth heavily depend on field surveys and censuses,which are expensive,labor-intensive,and time-consuming [19].This means that detailed maps of agricultural land use are often highly localized owing to the diversity of cropping types,and publicly available labeled databases for constructing classifiers are often difficult to use.Furthermore,the classification results obtained using pixel-based approaches require manual postprocessing to address the large number of misclassified pixels with similar spectral values.This is known as the ‘‘salt and pepper”phenomenon.

Datasets often have imbalanced class distributions in many common applications owing to differences in the spatial distribution of ground objects,especially crops.This class-imbalance problem affects the accuracy of most machine-learning classification tasks [20].Many methods,such as boosting or adaptive-boosting algorithms,have enabled the use of ensembles of feature selectors to ameliorate the effect of class imbalance on the performance of learning algorithms [14].Several sampling/reweighting schemes and resampling schemes including up-sampling,under-sampling,and hybrid sampling have been investigated to alleviate the imbalance problem[21].For example,by increasing the number of small samples in the training data set,the up-sampling method provides the possibility of addressing class imbalance for more accurate agricultural information.

Since the 2012 ImageNet classification challenge [2],DL has become a semantic segmentation tool focusing on large and deep artificial neural networks.However,the problems of within-class diversity and between-class similarity remain major challenges in RS classification[22].The huge success of DL-based classification methods is due largely to the powerful extraction capability of multiple features from a few annotated samples [22,23].Consequently,many studies have investigated the use of DL,making such methods a better choice for handling complex scenarios,especially the classification of RS imagery [24].Recent advances[25] have further demonstrated that end-to-end approaches can discover intricate relationships from high-dimensional data for object classification.Convolutional Neural Network (CNN) and Recurrent Neural Network (RNN) are both prominent DL architectures[5].CNN methods,such as discriminative CNNs(D-CNNs)and Siamese-prototype network [22,23],have been adopted to extract multilevel features in spatial and spectral domains from RS imagery.

Satellite images based on DL methods provide precise data for large-scale land-use and crop monitoring.Khan et al.[26]reported a classification accuracy of 90% for cultivated and non-cultivated land and approximately 70% for crop classifications based on Landsat-8 imagery.Kussul et al.[27] employed a multilevel DL architecture to achieve accuracies of more than 85%for major crop types (wheat,maize,sunflower,soybeans,and sugar beet) using multi-temporal and multi-source satellite imagery.Xu et al.[28]used a long short-term memory structure to classify corn,soybean,and other crops based on 30-m-resolution Landsat data,reporting OA values of 89.65-94.92% for six different sites.Liu et al.[29]reported marked differences in classification results based on data sets with different spatial resolutions,and the fusion of GF and S-2A images achieved an OA of 92.8%using the Deeplab V3+.Thus,DL methods are made more powerful by use of multiple stacked feature-extraction layers.

The Fully Convolutional Network (FCN) was proposed and developed to make predictions for per-pixel tasks including semantic segmentation [30]. Peng et al. [31] found that the FCN showed great application potential in image classification with hierarchical learning according to the different band characteristics of the imagery. The FCN has been successfully applied in diverse agricultural practices, such as precise crop classification from satellite images. UNet++, also known as Nested UNet, was introduced by Zhou et al. [32] based on the FCN architecture. It has been modified and extended to work with fewer training datasets, segment optical images, and yield satisfactory classification results [4]. In the field of crop classification, several studies [1,6,10,31] have shown that it is very important to obtain useful information from multiple features. The major advantage of UNet++ is the multilevel full-resolution feature map-generating strategy in optical remote-sensing imagery [5]. In addition to reducing salt-and-pepper phenomena, transferable learning can reduce the computing cost and workload of acquiring ground samples manually. The prediction speed of the pruned network of UNet++ is also accelerated [5]. The UNet++ architecture trained in an end-to-end manner is widely used owing to its high efficiency and accuracy in transferable learning.

Following recent advances in deep neural networks,object detection and tracking algorithms with trained backbones have been greatly improved.It is essential to use multiple backbones,such as the Visual Geometry Group (VGG) [33],EfficientNet [34],Residual Networks(ResNet)[35],and RegNet[36],to perform feature extraction for segmentation and classification in RS images.The patch size (pixel resolution) of images and labels also affects the performance of transfer learning.For example,by increasing the image size four times by interpolation,Hirahara et al.[37]improved average classification accuracy from 56.3% to 71.5%.To build on this work,it is necessary to identify preprocessing,feature selection,imbalanced class,model evaluation,post-processing requirements,and comparison of classification results for multisensor data,as well as identify optimal indicators for information classification and segmentation of RS imagery.The comparison methods of classification results include UNet,Deeplab V3+[38],RF and object-oriented classification (OOC).The UNet proposed by Ronneberger et al.[39]used a sliding-window set up to predict the class label of each pixel with only a few training samples and yielded precise semantic segmentation.The DeepLab V3+[38]added a decoder module to refine the boundary details and applied a separable,depthwise convolution in the decoder modules as well as Atrous Spatial Pyramid Pooling (ASPP) to improve the accuracy of deep segmentation and classification.Pixel-based RF is often established as a baseline model and has been shown superior to other machine-learning algorithms.The OOC method then subdivides an image into homogeneous regions to overcome the ‘‘salt and pepper” phenomenon in the classification results.

The main objective of this study was to assess the spatial generalizability of the UNet++ architecture and increase the accuracy of land-use and crop mapping at multiple spatial resolutions. This assessment included (1) evaluating the importance of spectrum-VI-texture features and determining feature-selection schemes for S-2A and GF PMS imagery; (2) evaluating the training model with five backbones and up-sampling of imbalanced classes; (3) comparing model performance based on loss functions and mean Intersection over Union (mIoU) in step (2), and selecting the optimal backbone to analyze the performance for four patch sizes; and (4) assessing prediction classification accuracies with the optimal backbone and patch size for transfer learning based on confusion matrices, overall accuracy (OA), and F1 scores. Overall, this work aims to offer an improved understanding of deep learning procedures as applied to remote sensing in agricultural applications.

2.1.Study sites

This study focused on land-use and crop mapping in the northern plain of Henan province, China (Fig. S1), which has a warm temperate climate with an annual precipitation ranging from 407 to 1230 mm. Crops in the province are diverse and account for nearly 10% of total grain production in China [40]. Winter wheat (WHE) and garlic (GAR) are the two main crop types, with the remaining areas mostly non-cultivated land (NCL) reserved for spring crops, greenhouse (GH) production, and other (OTH) crops, such as rape and onion. Non-cropland cover types include urban (URB, including building, road, and other construction), forest land (FL, including nursery stock planted on cultivated land, and woodland around cities and villages), and water (WAT, including the Yellow River, ditches, and ponds). Six sites were chosen for the transferable deep learning study, each covering an area of ~20 km × 20 km. Each site included two sample plots, such as C1 and C2 in site C.

2.2.Image and label datasets

The growing season of winter wheat and garlic generally involves sowing in early October and a jointing stage in March the following year.Following a growing stage,these crops mature and are harvested at the end of May.To reduce the impact of other crops planted in late April,such as peanuts and tobacco,cloudless images with similar dates at critical phenological stages were obtained from S-2A and GF satellites from March to May yearly(Table S1).The spectral characteristics of ground objects change little during this period.Owing to the large amount of manual processing in GF PMS images,only typical regions of each site are selected.Both satellites utilize the Universal Transverse Mercator projection.S-2A follows the U.S.Military Grid Reference System(US-MGRS) to set the satellite orbit number,such as T50SKD and T50SKE.The GF name consists of the date and product number.Calibrated and corrected S-2A atmospheric bottom reflectance data were obtained,and the feature bands were calculated and composited in Google Earth Engine (GEE).GF PMS images were obtained from the China Centre for Resources Satellite Data and Application website (http://www.cresda.com/CN/Downloads/dbcs/),and the radiation calibration,atmospheric correction,and feature bands were processed using The Environment for Visualizing Images(ENVI) and Python programming [16,23].

To obtain high-quality samples and ensure efficient model performance, a handheld GPS system was used to obtain ground data each year. Then, a library of interpretation marks was established as a reference for manual vectorization and interpretation. In total, 6, 12, and 4 sample plots (~1 km × 1 km) and some sample points were investigated in 2019, 2020, and 2021, respectively, as shown in Table 1. The major crop is wheat, with its area proportion in the sample plots exceeding 78%. One of the sample plots and some points are illustrated in Fig. 1a, b. Owing to differences in spatial resolution, ground-object recognition capabilities, and ground-object variability (Fig. 1c-f), the S-2A and GF PMS labels were determined separately, based on the following criteria:

Table 1 Area (km²) of sample plots and sample points for each year. WHE, winter wheat; GAR, garlic; NCL, non-cultivated land; GH, greenhouse; OTH, other crops; URB, urban land; FL, forest land; WAT, water.

Fig. 1. Ground samples and images, and land-use change in S-2A images for site D in 2019 and 2020. (a) S-2A image and samples at site F in 2019. (b) GF PMS image and samples at site C in 2020. (e) and (f) are classification results corresponding to images (c) and (d), respectively.

(1) If a regional crop planting structure was complex and similar to Fig. 1a, a large amount of manual vectorization work was required; if it was similar to Fig. 1b, RF was used to extract the information, although the results still required subsequent manual editing.

(2) The classification results were verified and corrected using the ground survey, including the uncertain classifications from step (1).

(3) For each year, 300 sample points were randomly generated, including 50 points for each of the WHE, GAR, and OTH land-cover classes and 30 points for each of the other classes, to verify the label accuracy based on the third ground survey and Google Earth. The results showed 7, 9, and 6 incorrect points in the 2019, 2020, and 2021 datasets, respectively. The main misclassifications were between FL (deciduous forest with height <1 m) and the NCL, GAR, and OTH classes, and between NCL (due to sparse weed cover) and OTH. The corrected classification results satisfied the label-production accuracy.

2.3.Patch size and up-sampling categories

The images and labels in DL models are usually clipped to different patch sizes (numbers of pixels) to avoid the memory overflow that results from excessive size of images.Clipping methods include regular-grid,sliding-window,and random-window clipping,and the first two methods can increase the number of images and labels based on a repetition rate.In the present study,with a regular grid and zero repetition rate,the clipped images(in tif format)were consistent with the spatial position and coordinate system of the original images,generating mosaic predicted results in post-processing.Both satellite image bands were stretched to RGB values of 0-255;the patch sizes of S-2A were 32 × 32,64 × 64,128 × 128,and 256 × 256 pixels,with 28,891,7155,1787,and 446 pairs,respectively,and a total area of~2930.688 km2;the patch sizes of GF were 64 × 64,128 × 128,256 × 256,and 512 × 512 pixels,with 37,184,9296,2324,and 562 pairs,respectively,and a total area of~609.223 km2.
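The regular-grid clipping with zero repetition rate described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `regular_grid_windows` and the `keep_partial` flag are hypothetical, and how edge patches smaller than the patch size were handled is not stated in the text, so dropping them by default is an assumption.

```python
def regular_grid_windows(height, width, patch, keep_partial=False):
    """Return (row_off, col_off, rows, cols) windows on a non-overlapping grid.

    Each window keeps its pixel offsets, so predictions for the patches can
    later be mosaicked back into the coordinate frame of the original image.
    """
    windows = []
    for row_off in range(0, height, patch):
        for col_off in range(0, width, patch):
            rows = min(patch, height - row_off)
            cols = min(patch, width - col_off)
            is_full = (rows == patch and cols == patch)
            # Assumption: incomplete edge patches are skipped unless requested.
            if is_full or keep_partial:
                windows.append((row_off, col_off, rows, cols))
    return windows


if __name__ == "__main__":
    # A 600 x 512 pixel image cut into 256 x 256 patches.
    for window in regular_grid_windows(600, 512, 256):
        print(window)
```

Offsets of this form can be passed to a raster reader that supports windowed reads, which keeps each clipped patch aligned with the spatial position of the source image.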

A general framework was used to address class-imbalance problems by evaluating the proportion and spatial distribution of the different categories.The pixel proportions of WHE,GH,GAR,URB,FL,NCL,OTH,and WAT of S-2A and GF PMS training data sets were 52.99%and 57.03%,0.27%and 0.69%,2.85%and 4.29%,24.44%and 19.64%,10.80% and 5.86%,5.05% and 9.67%,0.45% and 0.60%,and 3.15% and 2.22%,respectively.Thus,the GH,GAR,OTH,and WAT classes were in lower proportions in both datasets.Despite the NCL of S-2A and the FL of GF PMS also having smaller proportions,their wide spatial distribution meant that up-sampling could be avoided.Accordingly,the GH,GAR,OTH,and WAT classes were up-sampled twice during the training process.
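One plausible reading of the up-sampling step is that every training patch containing a minor class is repeated in the training list. The sketch below assumes this reading; the function `upsample_minor`, the `copies` parameter, and the representation of a patch as an identifier plus the set of classes it contains are all hypothetical.

```python
MINOR_CLASSES = {"GH", "GAR", "OTH", "WAT"}


def upsample_minor(patches, minor=MINOR_CLASSES, copies=2):
    """Repeat patches that contain at least one minor class.

    patches: list of (patch_id, classes_present) tuples.
    copies:  number of extra copies appended per qualifying patch
             (assumed interpretation of "up-sampled twice").
    """
    out = []
    for patch_id, classes in patches:
        out.append((patch_id, classes))
        if minor & set(classes):
            out.extend([(patch_id, classes)] * copies)
    return out


if __name__ == "__main__":
    demo = [
        ("p0", {"WHE", "URB"}),   # only major classes: kept once
        ("p1", {"WHE", "GAR"}),   # contains garlic: kept once plus 2 copies
        ("p2", {"WAT"}),          # contains water: kept once plus 2 copies
    ]
    print(len(upsample_minor(demo)))
```

Because the duplicated patches pass through random augmentation during training, the repeated samples need not be pixel-identical when the model sees them.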

3.1.Feature selection

3.1.1.Feature grouping

GEE provides a convenient way for the rapid calculation of VIs and texture using S-2A imagery.The three bands with 60-m resolution,B1,B9,and B10,were removed during preprocessing.The features were divided into five groups (Table S2),and a subset of features was selected to evaluate the performance of the classification task by comparing the original bands.The VIs and texture determinations were based on Chaves et al.[41] and Haralick[42],respectively.

3.1.2.Band selection for the texture feature

The purpose of PCA is to transform input hyperspectral data into a lower-dimensional subspace to eliminate multicollinearity [10]. The output of PCA in ENVI is a set of features that include the mean and standard deviation values. The mean and standard deviation values corresponding to the spectral bands of S-2A in Table S2 are shown in Fig. S2. The near-infrared (NIR) and red edge 4 (RE4) bands yielded higher values in the S-2A images, and the NIR band had higher values in the GF images. In view of the conclusions of Proisy et al. [43] and Meroni et al. [16], the RE4 band of S-2A and the NIR band of GF PMS were selected to calculate texture features.

3.1.3.Feature importance and selection

The pixel values for each band of S-2A and GF PMS images involved in training and prediction in 2019-2021 were calculated using the XGBoost algorithm and were based upon the ground sample points listed in Table 1.Fig.2a shows the importance scores for 32 features of the S-2A images,while the remaining six features including NDBI,RVI,RRI1,RRI2,MSRre,and Cire were not further evaluated,as their respective scores were below 10.Table S2 shows the normalized difference water index (NDWI) including NDWI5 and modified NDWI (MNDWI).The MNDWI with the higher score was selected.Fig.2b shows the importance scores for 17 features of the GF PMS images,for which the ratio vegetation index(RVI)and the soil adjusted vegetation index(SAVI)were not further evaluated as their scores were <10.

Feature selection preserves the physical meaning of the original features and provides better readability and interpretability for the data model.We designed two different feature schemes for comparison.The first scheme without feature selection (WFS) of S-2A and GF PMS consisted of respectively 10 and 4 spectral bands of group 1 in Table S2.The second scheme with feature selection(FS) of S-2A and GF PMS consisted of respectively 15 (B,R,RE2,RE3,NIR,SWIR1,SWIR2,MNDWI,IBIB,EVI,RENDVI,RE4NDVI,TVI,SAVG,CORR)and 9(EVI,MEAN,B,NIR,VAR,NDWI8,CORR,G,R)feature bands,and these are marked in the blue columns of Fig.2.

3.2.UNet++architecture

The UNet++ architecture consisted of convolution blocks, down-sampling and up-sampling modules, and skip connections, as illustrated in Fig. 3. By adding more intermediate convolutional blocks and densifying the re-designed skip connections between blocks, the UNet++ architecture ultimately results in an easier optimization problem and, thus, more accurate classification results [31,32]. The convolutional blocks $x^{n,m}$ are introduced to bridge the semantic gap between the contracting and the expanding blocks.

3.3.Backbones

The backbone,which provides a model parameter trained on ImageNet dataset [2],has strong feature-extraction capability[44].It can assist the main network architecture to achieve a higher classification accuracy.In the present study,five backbones including VGG16,EfficientNet-b7,ResNet50,Timm-ResNet101e,and Timm-RegNetY-320 from the segmentation_models_pytorch library(https://github.com/qubvel/segmentation_models.pytorch) were used to evaluate performance with respect to land-use and crop classification from the S-2A and GF images.

The VGG16 model [33] was developed for image recognition, and has demonstrated high performance in many applications. EfficientNet was developed by Tan and Le [34], and includes optimized network width, depth, and resolution. ResNet [35] adopts residual connections to ease optimization and enable the training of much deeper networks, and has five versions with different numbers of layers (ResNet-18, ResNet-34, ResNet-50, ResNet-101, and ResNet-152). RegNet was originally proposed by Radosavovic et al. [36] in 2020, and the widths and depths in its networks can be explained by a quantized linear function.

3.4.Model evaluation

3.4.1.Loss function

The PyTorch machine learning library developed by Facebook AI Research (FAIR) was used in the implementation given its ability to compute loss and perform validation from its repository. The cross-entropy loss function (Eq. (1)) can be used to calculate the task loss of multiple categories [45]. Label smoothing (Eq. (2)) is used to reduce the occurrence of overfitting, and it considers the loss of the correct and incorrect labels in the training sample. Thus, the label smoothing cross-entropy loss function (Eq. (3)) was used for backpropagation, and considered the relationships between different classes.

Fig.2.Feature importance scores of different feature groups of S-2A and GF PMS images.

where $y_k$ is "1" for the ground-truth label and "0" for the wrong label, $p_k$ is the likelihood that the model assigns to the $k$th class, and $N$ is the number of categories.
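As an illustration of how cross-entropy and label smoothing combine, the following sketch uses the standard forms: the smoothed target is $(1-\alpha)\,y_k + \alpha/N$, and the loss is the negative sum of target times log-likelihood over the $N$ classes. The smoothing factor `alpha` and its default of 0.1 are assumptions, since no value is given in the text, and the function names are hypothetical.

```python
import math


def smooth_labels(true_class, n_classes, alpha=0.1):
    """Smoothed one-hot target: (1 - alpha) * y_k + alpha / N for each class k."""
    return [
        (1.0 - alpha) * (1.0 if k == true_class else 0.0) + alpha / n_classes
        for k in range(n_classes)
    ]


def cross_entropy(targets, probs, eps=1e-12):
    """Cross-entropy between a target distribution and predicted likelihoods."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(targets, probs))


def label_smoothing_ce(true_class, probs, alpha=0.1):
    """Cross-entropy computed against the smoothed target."""
    return cross_entropy(smooth_labels(true_class, len(probs), alpha), probs)


if __name__ == "__main__":
    probs = [0.7, 0.2, 0.1]                            # likelihoods for 3 classes
    print(label_smoothing_ce(0, probs, alpha=0.0))     # plain cross-entropy
    print(label_smoothing_ce(0, probs, alpha=0.1))     # with label smoothing
```

With `alpha = 0` the function reduces to plain cross-entropy; with `alpha > 0` the incorrect classes also contribute to the loss, which is the sense in which the smoothed loss considers both correct and incorrect labels.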

Dice coefficient loss (DCL,Eq.(4)) can improve segmentation and weaken the class imbalance problem[46].The joint loss function (Eq.(5)) of the label smoothing cross-entropy and DCL was used to evaluate model performance:

where $y$ and $\hat{y}$ denote the ground-truth label and the predicted label, respectively;

where $w_{CE}$ and $w_{DL}$ are the weight coefficients of label smoothing cross-entropy and Dice loss, respectively, both of which were set to 0.5.
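The joint loss can be sketched for a single class as below. The Dice term uses the common soft form $1 - 2\sum y\hat{y} / (\sum y + \sum \hat{y})$, which is an assumption about the exact form of Eq. (4); the small constant `eps` and the function names are likewise hypothetical. The weights follow the 0.5 and 0.5 setting stated in the text.

```python
def dice_loss(y_true, y_pred, eps=1e-7):
    """Soft Dice loss for one class over flattened pixel lists.

    y_true holds 0/1 ground-truth values and y_pred holds predicted
    probabilities (or 0/1 labels) for the same pixels.
    """
    intersection = sum(t * p for t, p in zip(y_true, y_pred))
    denominator = sum(y_true) + sum(y_pred)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


def joint_loss(ce_value, dice_value, w_ce=0.5, w_dl=0.5):
    """Weighted sum of the label smoothing cross-entropy and the Dice loss."""
    return w_ce * ce_value + w_dl * dice_value


if __name__ == "__main__":
    truth = [1, 1, 0, 0]
    pred = [0.9, 0.6, 0.2, 0.1]
    d = dice_loss(truth, pred)
    print(d, joint_loss(0.4, d))
```

Because the Dice term is normalized by the size of the class itself, a rare class contributes on the same scale as a dominant one, which is why it is commonly paired with cross-entropy on imbalanced data.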

3.4.2.mIoU

For multiple classes,the mIoU(Eq.(6))is calculated as the mean IoU of each category to measure segmentation accuracy.In this study,the joint loss and mIoU results were compared to evaluate the model performance in the UNet++architecture.

where $k$ is the number of classes, $p_{ij}$ is the number of pixels that belong to class $i$ but are classified as class $j$, $p_{ji}$ is the number of pixels that belong to class $j$ but are classified as class $i$, and $p_{ii}$ is the number of pixels in the overlapping area of class $i$.
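Using the definitions above, the per-class IoU is $p_{ii} / (\sum_j p_{ij} + \sum_j p_{ji} - p_{ii})$ and the mIoU is its mean over classes. The sketch below computes this from a confusion matrix; it assumes rows index the reference class and columns the predicted class, and it skips classes that occur in neither the labels nor the predictions, a choice the text does not specify.

```python
def mean_iou(cm):
    """Mean Intersection over Union from a square confusion matrix.

    cm[i][j] is the number of pixels of reference class i predicted as class j.
    """
    k = len(cm)
    ious = []
    for i in range(k):
        p_ii = cm[i][i]
        row_total = sum(cm[i])                        # all pixels of class i
        col_total = sum(cm[j][i] for j in range(k))   # all pixels predicted as i
        union = row_total + col_total - p_ii
        if union > 0:                                 # assumption: skip absent classes
            ious.append(p_ii / union)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    confusion = [
        [3, 1],
        [1, 5],
    ]
    print(mean_iou(confusion))   # mean of 3/5 and 5/7
```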

Fig.3.UNet++architecture including dense connections.The input is the 512 × 512 patch size of S-2A images with B/G/R bands.The dense skip connections are shown in black and green and the output results with deep supervision are shown in red.

3.5.Baseline classification models

In addition to the model evaluation, UNet, Deeplab V3+, RF, and OOC were selected to compare the predicted results. In this study, the performance metrics of the predicted segmentation were evaluated based on overall accuracy (OA), recall and precision (Eq. (7)), and F1 score (Eq. (8)), as well as confusion matrices.

where TP,FP,FN,and TN are true positive,false positive,false negative,and true negative classifications,respectively.
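These metrics follow the standard definitions: precision is TP / (TP + FP), recall is TP / (TP + FN), and F1 is their harmonic mean. The sketch below derives them per class from a confusion matrix, assuming rows are reference classes and columns are predictions; the function names and the example matrix are hypothetical and are not results from this study.

```python
def class_metrics(cm, i):
    """Precision, recall, and F1 score for class i of a confusion matrix."""
    k = len(cm)
    tp = cm[i][i]
    fn = sum(cm[i]) - tp                          # class i pixels that were missed
    fp = sum(cm[j][i] for j in range(k)) - tp     # other pixels labelled as class i
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def overall_accuracy(cm):
    """Share of correctly classified pixels over all pixels."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical two-class case: a dominant class and a rare class.
    confusion = [
        [95, 0],
        [4, 1],
    ]
    print(overall_accuracy(confusion))    # high overall accuracy
    print(class_metrics(confusion, 1))    # low recall and F1 for the rare class
```

In the hypothetical matrix, OA is 0.96 while the rare class has a recall of 0.2, which illustrates why OA alone can hide poor performance on minor land-cover types.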

3.6.Experiment design

The overall experimental workflow included three stages: data preprocessing, modeling, and evaluation (Fig. 4). To improve model generalization, all of the image labels were used as training datasets and validation datasets with different enhancement methods. The training-data enhancement strategy involved random horizontal flip, vertical flip, diagonal flip, and 5% linear stretching. A random 0.8%, 1%, or 2% linear stretch was applied to the validation data. Historical data from multiple sites (2019 and 2020) were used to build the models, and the optimal model was used to extract information for selected sites for 2019-2021 to assess the spatio-temporal applicability. The 2021 prediction and accuracy results (for data not included in the training datasets) were used to demonstrate the performance and generalizability of the DL model. Finally, S-2A and GF PMS imagery was used to produce 10-m and 2-m resolution maps.
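The flip part of the training augmentation can be sketched on a single-band patch stored as a list of rows. This is only an illustration: treating the diagonal flip as a transpose, applying each flip with probability 0.5, and the function names are assumptions, and the linear stretching step is omitted because its exact definition is not given.

```python
import random


def hflip(band):
    """Horizontal flip: reverse the pixel order within each row."""
    return [row[::-1] for row in band]


def vflip(band):
    """Vertical flip: reverse the order of the rows."""
    return [row[:] for row in band[::-1]]


def dflip(band):
    """Diagonal flip, taken here as a transpose (assumed interpretation)."""
    return [list(col) for col in zip(*band)]


def augment_pair(image, label, rng=random):
    """Apply the same random flips to an image band and its label patch."""
    for flip in (hflip, vflip, dflip):
        if rng.random() < 0.5:            # assumed probability per flip
            image, label = flip(image), flip(label)
    return image, label


if __name__ == "__main__":
    patch = [[1, 2], [3, 4]]
    print(hflip(patch), vflip(patch), dflip(patch))
    print(augment_pair(patch, patch, random.Random(0)))
```

The key design point is that the image and its label always receive identical geometric transforms, so the per-pixel correspondence needed for segmentation is preserved.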

For the satellite image segmentation, the optimal backbone for a specific patch size, such as 64 × 64 for S-2A and 256 × 256 for GF, was determined among five possible backbones. Then, the accuracies of different patch sizes with the optimal backbone were used to evaluate the feasibility of the feature selection schemes. Finally, the applicability of the DL model was characterized by evaluating the classification accuracy of the prediction results. All networks were trained using the Adam optimizer with a cosine decay learning rate schedule [47], and the initial learning rate and weight decay were set to 0.0001 and 0.001, respectively. Other model parameters were set as follows: T_0 (the number of epochs until the first restart) = 2; T_mult (the factor by which the restart period increases after each restart) = 2; eta_min (the minimum learning rate) = 0.00001; batch size = 16; and initial number of epochs = 100. To prevent overfitting, the number of epochs during training was determined based on the validation data and the loss and mIoU of the training data. When neither the loss nor the mIoU had improved over 10 consecutive epochs, network training was stopped. The modeling process was performed on a Windows Server workstation with two Intel Xeon E5-2697A processors, 256 GB of RAM, and eight NVIDIA Tesla P100-SXM2 graphics cards (16 GB of RAM). All the models were implemented on the Python platform using the PyTorch library for DL classification, and the GDAL library was used for image processing.
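The parameter names T_0, T_mult, and eta_min match those of a cosine annealing schedule with warm restarts, in which the learning rate within a cycle of length $T_i$ is $\eta_{min} + \tfrac{1}{2}(\eta_{max} - \eta_{min})(1 + \cos(\pi T_{cur}/T_i))$. The pure-Python sketch below reproduces this formula with the stated settings, together with a simple stopping rule; it is not the training code of this study, and the names `warm_restart_lr` and `EarlyStopping`, as well as the reading that training stops only when neither loss nor mIoU improves, are assumptions.

```python
import math


def warm_restart_lr(epoch, base_lr=1e-4, eta_min=1e-5, t_0=2, t_mult=2):
    """Learning rate at a given epoch (counted from zero) under cosine
    annealing with warm restarts."""
    t_i, t_cur = t_0, epoch
    while t_cur >= t_i:        # step through completed cycles
        t_cur -= t_i
        t_i *= t_mult          # each new cycle is t_mult times longer
    cosine = 1.0 + math.cos(math.pi * t_cur / t_i)
    return eta_min + 0.5 * (base_lr - eta_min) * cosine


class EarlyStopping:
    """Signal a stop after `patience` epochs without improvement in loss or mIoU."""

    def __init__(self, patience=10):
        self.patience = patience
        self.best_loss = float("inf")
        self.best_miou = float("-inf")
        self.stale = 0

    def step(self, loss, miou):
        if loss < self.best_loss or miou > self.best_miou:
            self.best_loss = min(self.best_loss, loss)
            self.best_miou = max(self.best_miou, miou)
            self.stale = 0
        else:
            self.stale += 1
        return self.stale >= self.patience


if __name__ == "__main__":
    for epoch in range(8):
        print(epoch, warm_restart_lr(epoch))
```

Under these settings, with epochs counted from zero, the learning rate returns to its initial value at epochs 2, 6, 14, 30, and 62, so the cycles become progressively longer as training proceeds.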

4.1. Model performance for the S-2A datasets

4.1.1. Optimal backbone analysis

The joint loss and mIoU metrics were used to evaluate the performance of the DL model; when backbone ‘A’ has a lower loss and a higher mIoU than backbone ‘B’, the predictions based on backbone ‘A’ will most likely achieve a higher OA and F1 score. For the 64 × 64 patch size of the S-2A imagery, the training dataset of 7155 pairs was increased to 16,435 pairs with two-time up-sampling. The joint loss, mIoU, and other indicators, such as the total number of epochs and the training time for each backbone and scheme, are shown in Fig. 5a-e. The mIoU results with FS were higher than those without FS. Apart from VGG16, the backbones showed clearly differentiated segmentation performance between the two schemes. After approximately the 32nd epoch, the curves for FS became smoother and more stable. The five backbones required ~71 epochs to complete the training process, and optimal performance was reached by the ~62nd epoch.
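Because mIoU is used throughout the comparison, a short sketch of how it is commonly computed may help. It assumes the standard definition, IoU = TP / (TP + FP + FN) per class, averaged over the classes that occur; the source does not state how absent classes were handled, so skipping them here is an assumption. The function names are illustrative.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are reference classes, columns are predicted classes."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm

def per_class_iou(cm):
    """IoU per class; None when a class is absent from both reference and prediction."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # reference pixels of c that were missed
        fp = sum(cm[r][c] for r in range(n)) - tp   # pixels wrongly assigned to c
        denom = tp + fp + fn
        ious.append(tp / denom if denom else None)
    return ious

def mean_iou(cm):
    valid = [v for v in per_class_iou(cm) if v is not None]
    return sum(valid) / len(valid)

if __name__ == "__main__":
    y_true = [0, 0, 1, 1, 2, 2]
    y_pred = [0, 1, 1, 1, 2, 0]
    cm = confusion_matrix(y_true, y_pred, 3)
    print(per_class_iou(cm), mean_iou(cm))
```

Unlike overall accuracy, every class contributes equally to the mean, so a poorly segmented minor class lowers mIoU regardless of how few pixels it covers.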

Fig. 4. Experimental workflow with transferable deep learning. The preprocessing, modeling, and accuracy evaluation of the prediction results are described. Post-processing mainly involved format conversion of the predicted results (from *.png to *.tif) and mosaicking of the results based on the spatial coordinate system information of the original images.

The more detailed parameter results in Fig. 5a-e show that the total epoch (TE) number and the optimal results for the two schemes were very similar. The loss values of the WFS-based VGG16, EfficientNet-b7, ResNet50, Timm-ResNet101e, and Timm-RegNetY-320 were 0.2%, 0.5%, 0.7%, 0.9%, and 0.9% higher, respectively, than the FS-based values. The corresponding mIoU values were 0.3%, 0.8%, 1.0%, 1.6%, and 0.9% lower, respectively, than the FS-based values. These results indicate that feature selection in the DL model improved not only the segmentation accuracy but also the stability of the training process. Among the five backbones, Timm-RegNetY-320 with the FS scheme had the lowest loss value (0.376) and the highest mIoU (0.756). Radosavovic et al. [36] suggested that RegNet provides simple and fast networks that work well across a wide range of FLOP regimes and outperform other popular models, including EfficientNet, ResNet50, and ResNet101. Run time is also a critical indicator for evaluating model performance on large data sets [44]. However, owing to the limited capacity for collecting crop data, the crop training data set is relatively small, which keeps the model run time acceptable for remote sensing applications. The total epoch time ranged from ~309 (VGG16) to ~490 min (EfficientNet-b7) for the five backbones. The mean epoch time of each backbone differed little between the two schemes. Consequently, Timm-RegNetY-320 was selected as the optimal backbone for the patch-size analysis of the S-2A imagery.

4.1.2. Patch size analysis

The training dataset pairs at patch sizes of 32 × 32, 64 × 64, 128 × 128, and 256 × 256 for both schemes were increased from 28,891, 7155, 1787, and 446 to 41,431, 16,435, 4177, and 1230, respectively. Fig. 5f-i shows the training results of joint loss, mIoU, and other indicators for the four patch sizes of the S-2A data sets with Timm-RegNetY-320. The model performance using FS was markedly better than that using WFS at patch sizes of 32 × 32 and 64 × 64. As the patch size increased, the results for the two schemes became increasingly similar. The mIoU values showed a clear increasing trend, whereas the loss first decreased and then increased. The differences between the two schemes across all listed parameters were smallest for the 128 × 128 patch size, with loss and mIoU differences of 0.001 and 0.002, respectively. At a patch size of 256 × 256, the mIoU was higher than at 128 × 128, but the loss was also higher.

The total and stop epochs of the two schemes were similar, and the total run time decreased markedly as the patch size increased: from 562.99 to 150.07 min with WFS and from 613.49 to 149.38 min with FS. Thus, based on the UNet++ architecture with Timm-RegNetY-320 and a 256 × 256 patch size, optimal classification of S-2A images could be obtained using the FS scheme.

4.2. Model performance of GF PMS datasets

4.2.1. Optimal backbone analysis

For the GF PMS imagery, the training dataset of 2324 pairs was increased to 3928 pairs after two-time up-sampling. The loss, mIoU, epoch number, and training time for each backbone and scheme are shown in Fig. 6a-e; the results for FS were superior to those for WFS. The area covered by the GF PMS dataset was 20.79% of the S-2A coverage, and the total training time and average epoch time were lower than those of the S-2A analysis.

The specific parameter results in Fig. 6a-e show that, except for ResNet50 with WFS, the total number of epochs and the stop epoch were almost the same across backbones: approximately 72 and 62, respectively. The DL model with Timm-RegNetY-320 based on FS achieved the lowest loss (0.342) and the highest mIoU (0.818). Accordingly, Timm-RegNetY-320 was selected as the optimal backbone to characterize the relative performance of the different patch sizes.

Fig. 5. Training parameter results of multiple backbones and patch sizes for both S-2A schemes, with and without feature selection. (a-e) Results of five backbones. (f-i) Results with the Timm-RegNetY-320 backbone at different patch sizes. TE, total epoch; SE, stop epoch; OL, optimal loss result at SE; OM, optimal mIoU result at SE; TT, total time (min).

4.2.2. Patch size analysis

The training dataset pairs at patch sizes of 64 × 64, 128 × 128, 256 × 256, and 512 × 512 for both schemes increased from 37,184, 9296, 2324, and 562 to 45,436, 12,812, 3928, and 1252, respectively. Fig. 6f-i shows the training results for the four patch sizes of the GF PMS datasets with Timm-RegNetY-320. At the same patch size, the model with FS had a lower loss and a higher mIoU than the model without FS. As the patch size increased, the results of the two schemes became increasingly similar. The results also show that a larger patch size is not always better: an initial improvement was followed by a decline. Thus, the optimal patch size for the GF PMS imagery was 256 × 256, which achieved the lowest loss (0.342) and the highest mIoU (0.818) based on the FS scheme.

4.3. Prediction results

4.3.1. S-2A segmentation and classification results

With Timm-RegNetY-320 and a patch size of 256 × 256, all 4,194,304 pixels of the FS-based prediction results at each site were verified. UNet++ yielded markedly higher OA and F1 scores than the UNet, Deeplab V3+, RF, and OOC algorithms (Fig. 7a-d). The OA and F1 score values of the UNet++-based results at every site were higher than 96% and 71%, respectively. The OA and F1 score of the UNet model at site E in 2021 were 92.90% and 70.03%, respectively, both lower than those of the UNet++ model. Apart from the UNet results at site E in 2021, the OA and F1 score values based on UNet, Deeplab V3+, RF, and OOC were all lower than 90% and 70%, respectively. The accuracy based on UNet and Deeplab V3+ was generally higher than that of RF and OOC. Thus, the OA and F1 scores of the UNet++ model demonstrate its relative advantages in generating valid and complete prediction results.

The UNet++-based DL model showed relatively stable performance across the different transfer sites. On the one hand, compared with the reference results, its classification results showed fewer “salt and pepper” artifacts than the RF results, especially for the GAR land-cover class at site F in 2019 and 2021, FL at site D in 2020, and URB at site E in 2021. On the other hand, OOC with a scale level of 150, chosen by comparing the segmentation results with those at scale levels of 130 and 170, also reduced the “salt and pepper” phenomenon and preserved patch integrity to a certain extent.

4.3.2. GF segmentation and classification results

For the GF PMS images based on FS, the UNet++ architecture with the Timm-RegNetY-320 backbone and a patch size of 256 × 256 always outperformed the UNet, Deeplab V3+, RF, and OOC models (Fig. 7e-h). The total pixel numbers for site F in 2019, site B in 2020, and sites E and F in 2021 were 14,942,208, 14,942,208, 10,616,832, and 11,206,656, respectively. The OA and F1 score values of the UNet++-based results for site F in 2019, site B in 2020, and sites E and F in 2021 were 75.34% and 54.89%, 97.72% and 73.25%, 87.66% and 63.28%, and 91.17% and 61.54%, respectively, all higher than those of the other four classification results. The OOC result was segmented at a scale level of 30, selected by comparing its accuracy with that at scale levels of 20 and 40. However, in contrast to the S-2A classification results, the accuracy values based on UNet and Deeplab V3+ were not superior to those of the RF and OOC methods, and were even lower than the accuracies of RF and OOC at site F in 2019. The reference result for site F in 2019 (Fig. 7e) shows the complexity of crop planting and the high fragmentation of the fields.
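As a quick arithmetic check on the pixel counts quoted in Sections 4.3.1 and 4.3.2, the sketch below divides each prediction area by the 256 × 256 patch size. The counts come from the text; the assumption that prediction tiles are square and non-overlapping, the 2048 × 2048 scene shape used in `tile_origins`, and the helper names are illustrative only.

```python
PATCH = 256

def patches_needed(total_pixels, patch=PATCH):
    """Return (whole_patches, leftover_pixels) for square, non-overlapping patches."""
    return divmod(total_pixels, patch * patch)

def tile_origins(height, width, patch=PATCH):
    """Top-left (row, col) of each tile; assumes both sides are multiples of `patch`."""
    return [(r, c) for r in range(0, height, patch) for c in range(0, width, patch)]

# Pixel counts as reported in the text.
AREAS = {
    "S-2A, each site": 4_194_304,
    "GF, site F 2019": 14_942_208,
    "GF, site B 2020": 14_942_208,
    "GF, site E 2021": 10_616_832,
    "GF, site F 2021": 11_206_656,
}

if __name__ == "__main__":
    for name, pixels in AREAS.items():
        n, rest = patches_needed(pixels)
        print(f"{name}: {n} patches, {rest} leftover pixels")
    # 4,194,304 = 2048 * 2048, so a square S-2A scene would be an 8 x 8 grid of tiles.
    print(len(tile_origins(2048, 2048)))
```

Every reported area is an exact multiple of 256 × 256 pixels (64, 228, 228, 162, and 171 patches), which is consistent with predictions being produced tile by tile and then mosaicked.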

Fig. 6. Training parameter results of different backbones and patch sizes for both GF schemes, with and without feature selection. (a-e) Results of five backbones. (f-i) Results with the Timm-RegNetY-320 backbone at different patch sizes. TE, total epoch; SE, stop epoch; OL, optimal loss result at SE; OM, optimal mIoU result at SE; TT, total time (min).

The OA values and F1 scores of the DL model for site F in 2019 were all lower than those of the other sites in 2020 and 2021. This finding may reflect the smaller number of bands in the GF PMS images and the more complex crop planting structure, especially in the case of the WHE, GAR, and NCL classes. Garlic is typically covered with plastic film when planted, possibly leading to errors in the GH and NCL classifications. The high-resolution GF images may contain more ground-object information, but this may also increase spatial heterogeneity [4]. The four red-edge bands of S-2A provide important data for complex crop classifications. As a result of these factors, the OA values and F1 scores of the GF PMS results were lower than those of the S-2A results.

4.4. Comparison results of different up-sampling methods

To further clarify the effect of up-sampling the small samples, the model parameters and prediction accuracy for the S-2A and GF PMS data sets were compared using the optimal backbone and patch size. Without up-sampling of small samples, the total epoch, stop epoch, and total time for S-2A and GF PMS were 72 and 71, 62 and 61, and 82.07 and 257.68 min, respectively, with the optimal loss and mIoU at the stop epoch being 0.498 and 0.391, and 0.712 and 0.662, respectively. The OA and F1 score values (%) of the S-2A predictions without up-sampling at site F in 2019, site D in 2020, and sites E and F in 2021 were 88.92 and 59.22, 69.74 and 41.67, 86.98 and 64.32, and 90.27 and 67.27, respectively; those of GF PMS at site F in 2019, site B in 2020, and sites E and F in 2021 were 65.59 and 37.56, 94.67 and 50.53, 81.68 and 47.22, and 83.17 and 48.11, respectively. Compared with the results in Fig. 5i and Fig. 6h, the mIoU values of both training models were more than 14% lower than those with up-sampling, and the prediction accuracies were also lower.

Fig. 8 shows the local classification results of the S-2A and GF PMS images with and without up-sampling of the small samples based on the UNet++ architecture. The results obtained with Timm-RegNetY-320, a patch size of 256 × 256, feature selection, and up-sampling were more accurate than those obtained without up-sampling. As shown in Fig. 7, winter wheat, the main crop, showed higher classification accuracy. Taking site F in 2019 for S-2A and GF as an example, the mixed spectral characteristics produced by lower vegetation coverage and mulching film affected the classification accuracy of garlic. Moreover, there were misclassifications among the greenhouse, non-cultivated land, and urban classes; their similar spectral features are the main factor affecting their classification accuracies. Compared with the S-2A classification results, the GF results in Fig. 8 also show that high-resolution images yielded a stronger ability to extract spatial details such as field roads, small crop plots near villages, and the fine segmentation of fields.

Fig. 7. Classification results and evaluation indicators of UNet++, UNet, Deeplab V3+, RF, and OOC for the study sites in 2019, 2020, and 2021. (a-d) S-2A classification results. (e-h) GF PMS classification results.

4.5. Comparison results of different patch sizes

As shown in Fig. 5f-i and Fig. 6f-i, the differences in loss values between FS and WFS at the same patch size for the S-2A and GF PMS imagery were all lower than 0.7%. Given such small differences, it is necessary to verify the accuracy of the prediction results of the different models. As listed in Table 2, the OA differences for both patch sizes based on the WFS scheme ranged from -0.94% to 7.83%. For the S-2A classification results, the OA and F1 score values with FS at a patch size of 128 × 128 were even lower than those without FS at site F in 2021. Taking the S-2A predictions at patch sizes of 128 × 128 and 256 × 256 as an example, the local results in Fig. 9 illustrate the differences between the prediction and reference results. Based on the FS scheme, Timm-RegNetY-320, and a 256 × 256 patch size, the OA values of all S-2A prediction results exceeded 96% and the F1 scores ranged from approximately 71% to 81% (Table 2). Except for the 75.34% OA value for site F in 2019, all of the GF OA values were higher than 87%, and the F1 scores ranged between 54% and 74%. Therefore, S-2A and GF images with feature selection achieved better prediction results at a patch size of 256 × 256.

Table 2. OA and F1 scores for S-2A and GF PMS imagery with and without feature selection. For 2020, site D/B denotes the S-2A predictions for site D and the GF PMS results for site B, respectively.

5.1. mIoU and average epoch time with different patch sizes

Figs. 5 and 6 show the influence of the five backbones and the patch sizes on model performance. As shown in Fig. 10, increasing the patch size plays the more important role in both model accuracy and training time. However, for the GF data set, model performance did not keep improving as the patch size increased. Note that training was not possible for the S-2A imagery with a patch size of 512 × 512, owing to the small number of training-set pairs. Based on these results, patch size had a greater influence on DL model performance and classification accuracy than backbone choice. Specifically, training datasets at larger patch sizes can capture more features of the predicted target and perform better at recognizing ground objects [4]. However, the GF PMS results show that a larger patch size does not necessarily yield higher accuracy. Rather, the optimal patch size for both the S-2A and GF PMS analyses was 256 × 256.

Fig. 10. Comparison of the mIoU and average epoch time for both S-2A and GF PMS schemes, with and without feature selection.

5.2. Classification evaluation at different patch sizes

Fig. 9 shows that forest land is clearly misclassified with FS at a patch size of 128 × 128 when compared with the result without FS at a patch size of 256 × 256. In addition, the predictions without FS at a patch size of 256 × 256 show clear misclassifications, especially between field roads and the GAR class, due to the spectral influence of winter wheat. However, the OA and F1 score values of the S-2A and GF PMS imagery at a patch size of 256 × 256 with FS are all higher than those without FS, reflecting the ability of the trained model to fit the training data. Therefore, the optimal patch size of the training data set should be determined first, and the training data set should then be combined with a feature selection method to obtain better classification results and accuracy.

5.3. Evaluation indicators for DL classification results

As listed in Table 2, the gap between the OA and F1 scores makes OA inappropriate as the sole metric for map assessment, particularly when a data set contains imbalanced categories. Therefore, in multi-class classification, the performance of a classifier should also be measured by the average F1 score. In Fig. 11, the predictions for the main land-cover classes (WHE, URB, NCL, FL, and WAT) show a high degree of accuracy, especially for WHE, with precision values of more than 97% and 83% for S-2A and GF PMS, respectively. This finding shows that accurate basic data can be obtained for large areas of major crops. The OA and F1 score values of the 2021 classification results, for which no training samples were used, were higher than 96% and 75% for S-2A and higher than 87% and 61% for GF PMS, respectively, showing the transferability of the DL model in temporal and spatial terms.

Fig. 11. Confusion matrix, precision, and recall of S-2A and GF PMS images for different sites with feature selection, Timm-RegNetY-320, and a patch size of 256 × 256. Diagonal values marked in red indicate numbers of correctly classified samples. The darker the color of precision and recall, the higher the accuracy value.

The accuracy of the GH and OTH classes was very low in all predictions, as was that of the GAR class at site D in 2020. This result can be explained by the following factors. First, the small total pixel number of the minor cover classes (GH and OTH) limited accuracy. Second, according to the ground survey data, almost all of the greenhouses in this area are 6 or 8 m wide (Fig. 8), which is below the spatial resolution of the S-2A imagery. Finally, the similar spectral characteristics of the GH, URB, and NCL cover classes result in lower classification accuracy. In Fig. 11, the precision and recall values of the GF analysis are generally lower than those of the S-2A classification, whereas some minor land-cover types (e.g., GH and OTH) were classified more accurately than in the S-2A results. On the one hand, the more abundant spectral information of S-2A imagery permits greater classification accuracy for complex ground objects, such as GAR, NCL, and FL; on the other hand, its lower spatial resolution and mixed pixels limit the classification accuracy of minor land-cover classes, such as GH and OTH.

Overall, the results of this study demonstrate the strong and stable spatial generalizability of DL models in land-use and crop mapping tasks. Because the lower classification accuracy for minor land-cover types is largely hidden by the OA indicator, OA should not be the only indicator used in such evaluations.
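The point that OA can mask failures on minor classes is easy to reproduce on a toy confusion matrix. The numbers below are invented for illustration and are not taken from Table 2 or Fig. 11; the sketch also assumes that the "average F1 score" is the unweighted (macro) mean over classes, which the source does not state explicitly.

```python
def overall_accuracy(cm):
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total

def precision_recall_f1(cm):
    """Per-class (precision, recall, F1); rows are reference, columns are prediction."""
    n = len(cm)
    out = []
    for c in range(n):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(n))
        actual = sum(cm[c])
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        out.append((p, r, f1))
    return out

def macro_f1(cm):
    scores = precision_recall_f1(cm)
    return sum(f1 for _, _, f1 in scores) / len(scores)

# Hypothetical 3-class example: one dominant class, one medium class,
# and one minor class that the classifier never predicts.
CM = [
    [9500, 100, 0],
    [200, 180, 0],
    [15, 5, 0],
]

if __name__ == "__main__":
    print(f"OA = {overall_accuracy(CM):.3f}, macro F1 = {macro_f1(CM):.3f}")
    for name, (p, r, f1) in zip(("major", "medium", "minor"), precision_recall_f1(CM)):
        print(f"{name}: precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
```

In this toy case the OA is close to 97% even though the minor class is missed entirely and the macro F1 is only about 0.51, which is the pattern the per-class precision, recall, and F1 values are meant to expose.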

5.4. Comparison of model and classification results

In comparison with the reference results in Figs. 7 and 8, the results for the eight land-cover classes show that the UNet++-based DL model performed best with a 256 × 256 patch size and with FS. Minor ground objects, such as individual greenhouses, field roads, and patchy crop covers, were better recognized in the GF PMS images than in the S-2A images in Fig. 8. In contrast, the classification accuracy of complex agricultural areas was higher using the S-2A imagery. Thus, multi-source images can provide more accurate classification results for ground-object monitoring. Indeed, the overall classification accuracy of the S-2A imagery was higher, and the preprocessing requirements lower, than those of the GF imagery. However, owing to the segmentation scale limitation, extracting accurate crop information in complex agricultural areas remains challenging. Moreover, even in the absence of ground samples, the prediction results and accuracy values for sites E and F in 2021 further demonstrate the advantages of the DL model in RS classification. In addition, we infer that it is essential to up-sample small samples in an imbalanced data set to improve the trained model and the accuracy of deep segmentation classification. The prediction results showed the success of the UNet++ architecture based on FS and imbalance processing, a finding attributed to its more accurate recognition relative to the other methods. The improvement in classification accuracy achieved by these methods is consistent with the findings of Haro-García et al. [48], Xu et al. [28], and Wei et al. [49]. Building on previous work [24,25,27,28], higher accuracies were achieved in this study for ground-object recognition. The experimental results described in this study demonstrate that the proposed methods can further improve classification accuracy and run time.

5.5. Limitations and future work

XGBoost was used to assess feature importance during the feature selection process. Through feature grouping and importance evaluation, the number of feature bands was increased from 10 to 15 for S-2A and from 4 to 9 for GF PMS. However, a unified numerical standard for the feature selection scheme was not developed for the different groups. The effect of the number of selected feature bands on model performance warrants further study.

According to the pixel proportions and spatial distribution of the samples, two-time up-sampling was applied to the minor samples during the training process to address class imbalance. However, according to Fig. 11, the IoU values of the GH and OTH classes at each site in the S-2A imagery remained limited even with up-sampling: 8.87% and 29.25%, 7.82% and 44.64%, 28.23% and 15.22%, and 38.00% and 0.81%, respectively, compared to 0.31% and 10.81%, 0% and 5.65%, 14.53% and 2.06%, and 16.71% and 0.66% without up-sampling. The IoU values of the OTH class in the GF PMS images were all lower than 7%. At present, the available imbalance processing methods include the decomposition-based approach, the synthetic minority over-sampling technique (SMOTE), and the Relief algorithm [50]. Further work is needed to improve the classification accuracy of minor crop types by combining these methods.
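One simple way to realize patch-level up-sampling is sketched below: every training pair whose label contains a minor class is repeated a fixed number of extra times. This is an assumption about the mechanism, since the source does not describe how patches were selected or whether the copies were augmented differently. The function names, the class ids in the demo, and the `extra_copies` and `min_fraction` parameters are hypothetical. The reported dataset sizes are included because every reported increase is an even number, which is at least consistent with two extra copies per selected patch.

```python
def upsample_minor_patches(patches, minor_classes, extra_copies=2, min_fraction=0.0):
    """Repeat (image, label) pairs whose label contains minor-class pixels.

    `label` is a 2-D list of class ids. A pair is repeated when the share of
    minor-class pixels exceeds `min_fraction`.
    """
    out = []
    for image, label in patches:
        out.append((image, label))
        pixels = [c for row in label for c in row]
        minor = sum(1 for c in pixels if c in minor_classes)
        if pixels and minor / len(pixels) > min_fraction:
            out.extend([(image, label)] * extra_copies)
    return out

def implied_selected(before, after, extra_copies=2):
    """Number of patches that would have been repeated under this scheme."""
    return (after - before) // extra_copies

# (pairs before, pairs after) as reported in Sections 4.1 and 4.2.
REPORTED_COUNTS = {
    "S-2A 32": (28891, 41431), "S-2A 64": (7155, 16435),
    "S-2A 128": (1787, 4177), "S-2A 256": (446, 1230),
    "GF 64": (37184, 45436), "GF 128": (9296, 12812),
    "GF 256": (2324, 3928), "GF 512": (562, 1252),
}

if __name__ == "__main__":
    demo = [("a", [[0, 0], [0, 0]]), ("b", [[0, 6], [0, 0]]), ("c", [[7, 7], [7, 7]])]
    print(len(upsample_minor_patches(demo, minor_classes={6, 7})))
    for name, (before, after) in REPORTED_COUNTS.items():
        print(name, implied_selected(before, after))
```

Repeating whole patches also repeats the majority-class pixels inside them, which may partly explain why the IoU of the smallest classes stays low; methods such as SMOTE operate on samples rather than patches and would need adapting for segmentation.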

Large-scale ground investigations and manual labeling work are needed during the critical growth phases of crops. In particular, the rich expression of ground-object information in high-resolution images makes manual vectorization difficult, which limits the size of the training and verification datasets. Finally, the DL model datasets require that the image acquisition date fall within a specific period, so that the spectral characteristics of ground objects in the satellite images are similar to those of the training datasets. These requirements limit the application of multi-temporal images. To improve model generalization and universality, the incremental classifier and representation learning procedures employed in the DL model also merit future research attention, given the variability of crop types.

A DL modeling framework with semantic segmentation of high-resolution optical satellite images has been described for land-use mapping and agricultural management. This study sought to utilize the feature indices produced by different sensors to assess model performance and improve classification accuracy. Model performance was evaluated based on the joint loss of the label-smoothing cross-entropy function and the Dice loss, mIoU, and training epoch time. The UNet++ architecture with the Timm-RegNetY-320 backbone achieved better classification performance than other backbone options, including VGG16, EfficientNet-b7, ResNet50, and Timm-ResNet101e. Based on an analysis of the OA, F1 scores, and confusion matrices of the prediction results for different sites, an optimal patch size of 256 × 256 was identified for both the S-2A and GF datasets. The F1 scores of the S-2A and GF predictions based on FS exceeded 71% and 54%, respectively, and outperformed the UNet, Deeplab V3+, RF, and OOC models. Thus, FS can improve DL model performance and classification accuracy when an optimal backbone and patch size are employed.

This study provides an end-to-end approach for learning generalizable feature representations across multiple regions and years. The results demonstrate that the described methods can yield valuable land-use and crop mapping products from S-2A and GF PMS images, offering a basis for the use of satellite data in agricultural practice, such as planting structure adjustment, loss estimation, and yield estimation. Compared to current conventional models, the approach described permits efficient and automatic learning of spectral features, vegetation indices, and textural features and is well suited to in-season crop and land-use classification. Indeed, the spatially generalizable model shows great potential for producing near-real-time, crop-specific cover maps for agricultural areas that lack reliable ground reference labels.

CRediT authorship contribution statement

Lijun Wang: Conceptualization, Methodology, Writing - review & editing. Jiayao Wang: Conceptualization. Zhenzhen Liu: Investigation, Data curation. Jun Zhu: Data curation, Validation. Fen Qin: Conceptualization, Supervision, Project administration, Funding acquisition.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

This study was supported by the National Science and Technology Platform Construction (2005DKA32300), the Major Research Projects of the Ministry of Education (16JJD770019), and the Open Program of Collaborative Innovation Center of Geo-Information Technology for Smart Central Plains Henan Province (G202006). In addition, some land-use data were obtained from the Data Center of the Middle and Lower Yellow River Regions, National Earth System Science Data Center, National Science and Technology Infrastructure of China (http://henu.geodata.cn). We sincerely thank the anonymous reviewers for their constructive comments and insightful suggestions that greatly improved the quality of this manuscript.

Appendix A. Supplementary data

Supplementary data for this article can be found online at https://doi.org/10.1016/j.cj.2022.01.009.
